OpenAI finds itself at the mercy of something very like an extortion racket. To keep pace in the cutthroat artificial-intelligence industry, it might have to hand politicians a veto over product design and shovel millions—if not billions—into activist causes. This is a shame. But OpenAI has only itself to blame.

The trouble goes back to the company’s founding. In 2015, the AI world was thick with effective altruists (EAs) and rationalists—left-leaning nerds bent on using abstract utilitarian formulas to pursue a centrally planned society. To recruit these idealists, OpenAI’s founders devised a convoluted structure: a nonprofit, pledged to “benefit humanity,” that would eventually take control of a for-profit subsidiary.

It was a deal with the devil—with the devil working, as is his wont, through people convinced they’re angels. As OpenAI racked up breakthroughs in large language models, some of its own employees tried to slow progress and delay launches. Though done in the name of “humanity,” this obstruction was thoroughly elitist: only the EAs themselves, not ordinary people, could be trusted with AI.

After failing to slow things down, some left in protest (and promptly started their own firm). But many stayed. Peter Thiel, an early investor, warned CEO Sam Altman that “the AI safety people” would destroy the firm.

They nearly did. In late 2023, OpenAI’s nonprofit board tried to fire Altman. The surface complaint was his supposed lack of candor. The deeper concern was his fitness to lead mankind into the future. When warned that without Altman, OpenAI could collapse, board member Helen Toner coolly replied: “That would actually be consistent with the mission.”

She was not wrong. The board served not investors or employees but “humanity”—meaning no one in particular, and nothing beyond their own moral hunches.

The board’s move was remarkably naïve. They assumed Altman could be easily replaced. Instead, public pressure swelled, employees threatened to depart en masse, and the board retreated. Altman returned. Inside the company, his brief exile became known as “the blip.”

Naturally, the blip rattled investors. As journalist Keach Hagey observes in her recent biography of Altman, they “were just not going to fund a company that could self-destruct as easily as OpenAI showed that it could.” So Altman set out to do two things: “root[] out the EAs” and “remak[e]” OpenAI’s corporate structure into “something a lot less weird.”

The purge was easy enough. The failed coup had made clear who ruled OpenAI, and Altman quickly restocked the board with sober adults. Larry Summers, for one, is unlikely to convince himself that AI is about to kill us all and then try to re-fire Altman.

The restructuring has been much harder. For starters, OpenAI appears to have cut a deal with Microsoft, its largest backer, to convert the Big Tech firm’s bespoke “future profit participation” rights into conventional equity.

That means re-valuing Microsoft’s holdings—an immensely complex task—while avoiding antitrust scrutiny over its new, more direct ownership stake. The two sides signed a “non-binding memorandum of understanding,” which could mean that they are close—or just that they are posturing to calm nerves.

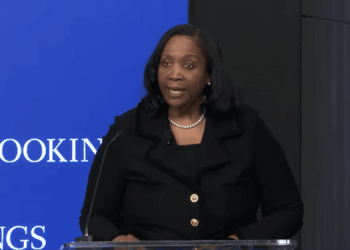

An even bigger obstacle lies with two state attorneys general. OpenAI is incorporated in Delaware, and its principal place of business is California. Both states wield sweeping power over nonprofits. Both can decide whether OpenAI is staying true to its mission, and both can attach conditions to any restructuring.

California attorney general Rob Bonta has seized the opportunity to denounce and investigate OpenAI, hoping to bend it to his political will. “We have been particularly focused,” he has declared, “on ensuring rigorous and robust oversight of OpenAI’s safety mission.”

That statement could have come from the mouth of an EA. In a sense, it did: Bonta is under constant pressure from activists and community groups, whose open letters bear the fingerprints, and often the signatures, of the very AI doomers once prevalent at OpenAI.

Bonta has leaned especially hard on the case of Adam Raine, a teenager who took his own life last April after extensive use of ChatGPT. The chatbot repeatedly urged him to seek help, but he evaded its safeguards and coaxed it into assisting his suicide.

OpenAI swiftly rolled out changes to prevent a recurrence. But Bonta seized on the tragedy to assert control over ChatGPT’s design. Insisting that Raine’s death was “avoidable,” Bonta has pressed OpenAI to implement age verification and made other “requests about OpenAI’s . . . safety precautions and governance.”

The firm has scrambled to respond. It diluted its restructuring plan, abandoning a clean split between nonprofit and for-profit arms. Instead, the nonprofit will retain control over a public benefit corporation charged with balancing investor returns against the putative needs of humanity.

The company also convened a commission of “community leaders” to advise on the nonprofit’s future. The group issued its report in July, and OpenAI responded by announcing a $50 million fund for civil-society and community groups. These will busy themselves with projects (in the report’s phrasing) to close “the economic opportunity gap” and foster “relational empathy” with the “historically excluded.”

OpenAI must raise colossal sums to build data centers for training and inference. Investor appetite seems insatiable. SoftBank has conditioned $20 billion on a successful restructuring, but many billions more are flowing in, apparently without such strings attached. OpenAI might continue to thrive—or the whole AI house of cards might collapse—regardless of what California does.

Either way, the drama exposes the folly of claiming to serve “humanity” in the abstract. The board members who tried to depose Altman couldn’t run a single company, let alone steward the human race.

The activists with fresh access to OpenAI’s resources, meanwhile, cannot wait to funnel cash to their favored NGOs. Show me who gets to decide what “benefits humanity,” and I’ll show you who’s about to benefit at humanity’s expense.

If Bonta succeeds in blunting OpenAI’s ingenuity, or in turning the firm into yet another politicized nonprofit, it will be because OpenAI let the barbarians through the gate.

When a free and pluralistic society lets private actors compete to build better things, the result is imperfect but ever-increasing prosperity. When central planners claim the power to save the world, the result is corruption, coercion, and misery—every time.

Photo by Andrew Caballero-Reynolds – Pool/Getty Images
