Here’s an open secret: the U.S. government collects vast amounts of data on every person living in the country. If you bank, use transportation, walk outside your home, pay taxes, or do any regulated activity from fishing to investing, a vault exists with your name on it. There’s a reason that going “off the grid” is an all-encompassing lifestyle choice: it’s hard not to radiate your every action in front of the government’s panopticon.

But these data are fragmented across local, county, state, and federal governments, as well as dozens of disparate agencies—none of which knows how to cooperate. At a recent meetup I hosted, a former top data director at the Pentagon described the Kafkaesque process it took just to create standardized data interchange formats to integrate two databases. Multiply that task by every agency, and that sums up the government’s big-data problem.

Civil libertarians might cheer, but relying on government incompetence isn’t a good way to protect civil rights. Thus, I’m puzzled by the extraordinary level of vitriol—from both the Left and the Right—directed toward the Trump administration’s efforts to update the government’s IT stack via contracts with the data-analysis firm Palantir.

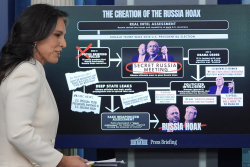

The critics’ main thrust is that a unified system would put the country on a slippery slope toward a social-credit system like communist China’s. On the right, in particular, the backlash combines an intense hatred of the “deep state” with a seemingly contradictory desire for that very same deep state to fulfill Trump’s policies.

Few of the critics talk about what improving the government’s databases would actually do: simplify tax payments; massively speed up benefits applications; improve criminal-justice efficiency and accuracy; and uncover waste, fraud, and abuse. All of these activities are quotidian in the private sector, but for some reason verboten in the public sector. As I wrote last summer in City Journal on adding more AI into government processes:

> Rather than enter a technological cul-de-sac, federal, state, and local governments must stay competitive with the private sector’s best practices. That means taking more humans out of the decision-making loop. Humans and machines are both ultimately black boxes; decision systems can be intentionally designed for optimal transparency and due process. Far from a computational dictator usurping the powers of free citizens, AI, properly implemented, is just another extension of a well-functioning republic.

I realize we’re not a well-functioning republic, so many hope to cordon the authorities off from our lives as much as possible. But the U.S. government is here to stay. Better to upgrade not just our data infrastructure but the institutions that manage it, too.

What would a more credible system look like? I just recorded a podcast episode with the technologist Joel Burke about his new book Rebooting a Nation, which chronicles the rise of Estonia’s digital government. How did the citizenry of a former Soviet republic once terrorized by secret police allow its newly democratic government to combine so many disparate data sources into a mostly unified whole?

The answer, essentially, is transparent legibility. Decisions about data were made deliberately and openly, encouraging citizen participation. Robust privacy controls and clear notifications were carefully designed to give people the sense that they had more control over their data than ever before. Estonia’s digital government didn’t appear overnight; it gradually incorporated more data sources through a lengthy transition. Early gains proved useful, helping to build public support for continued expansion.

In short, the country gained both efficiency and privacy through its digitalization efforts. We shouldn’t be surprised by this outcome; keeping everything on paper is hardly a guarantee of either goal.

One crucial difference between Estonia and America: the U.S. government lacks the capability to do this sort of data engineering and so must outsource it to the private sector in the form of Palantir. That’s a typical issue with American state capacity, particularly in technology.

It’s worth debating whether the government should have this capability in-house, but the reality is that it doesn’t. No agency is currently capable of doing this work, since no agency has the political wherewithal or the workforce to cut through endless debates on interchange formats and just deliver an excellent product.

That leaves Palantir as almost the only possible provider of these services. For activists on both left and right, Palantir, its CEO Alex Karp, and its founder and board member Peter Thiel seem to represent an unholy trinity of some kind. Peel off the layers of invective and counter-invective, though, and the controversy shows itself to be all smoke and no fire. Palantir is a full-stack data software and consulting firm that integrates databases and offers visualization and analysis functions on demand. It’s a good product, but hardly the sort of Orwellian super-system its detractors imagine.

Yet criticism along these lines has remained unchanged for more than a decade. No one sees Palantir as a business like any other. Instead, activists have turned it into the linchpin of the debate over whether the government should use data effectively.

I believe that government—constrained by careful privacy protections and transparent due process procedures—should be able to offer much more robust and efficient services by leveraging its massive data to benefit me and everyone else. So yes, I want Palantir to munch all of my public data.

Unlike most activists, I have zero faith that keeping data lying dormant in a vault will protect me. That’s a passive model of privacy. Only through active efforts to create robust institutions can we be assured that the government’s powers will be properly circumscribed.

Where to draw the line and how to build such institutions are worthy subjects for debate. Unfortunately, data privacy is one of the hardest policy areas to tackle in Washington. Proposals are almost always seen as direct attacks on the digital advertising industry, which earns tens of billions of dollars annually and spends heavily on lobbying at both the federal and state levels. Government privacy rules are similarly stalled, for fear that momentum might shift toward regulating the private sector as well.

This lethargy must be overcome. Centralized databases are coming to government, and so is robust artificial intelligence. It’s inevitable that, for instance, small claims courts will adjudicate cases algorithmically in the relatively near future, even if only as triage. If you don’t believe me, consider how AI will help litigants file lawsuits faster and with more documentation. The courts will need to automate just as much as their petitioners.

Poorly designed databases don’t protect us from government; they just result in bad service delivery, which intensifies the sense among U.S. citizens that their government can’t do anything well. We need to go in the opposite direction.

The word “state” and the word “statistics” descend from the same Latin root. Bad statistics means bad government, as has been true since the dawn of civilization. The panopticon already exists—let’s get some benefit from it.

Photo by STR/NurPhoto via Getty Images
